Processing Video, to do Face Recognition with Go and Python

UPDATE: We now officially support video through Videobox, so you don’t have to extract frames yourself to use Machine Box; Videobox can do it for you. This blog post remains useful as a reference if you want to do it yourself.

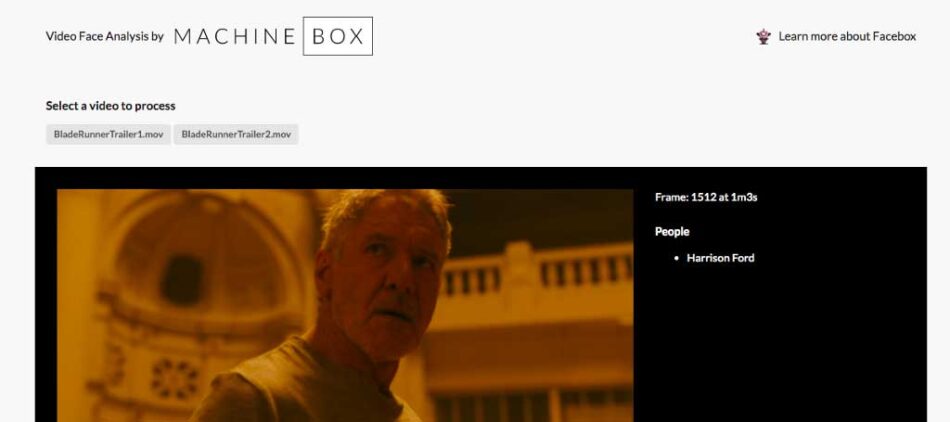

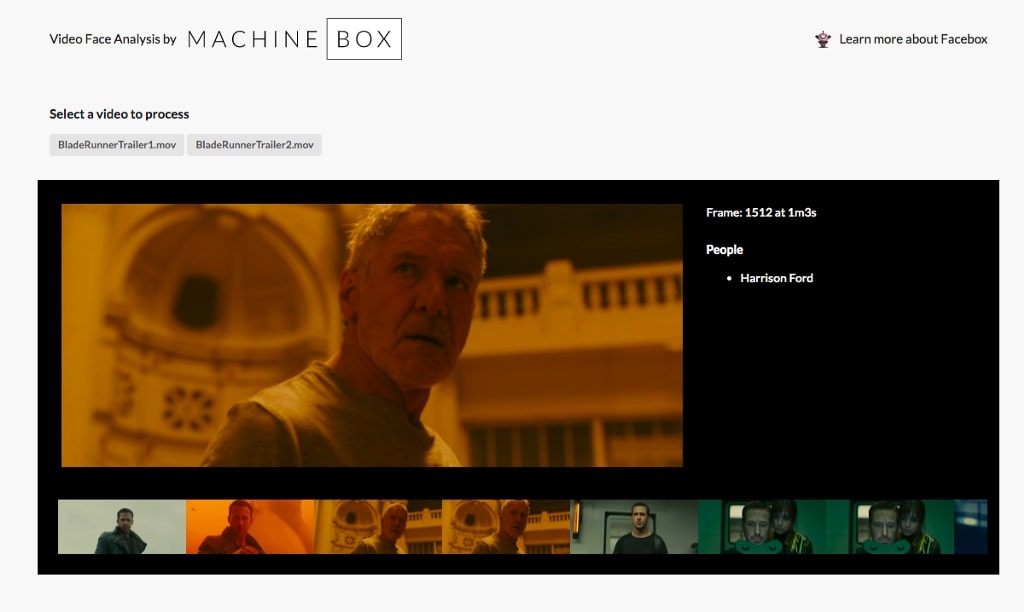

If you have lots of video content but don’t know who appears in it, you can quickly put together a tool that will automatically detect and recognise people using Go, Python and Facebox.

Previously we showed you how to do face recognition on a webcam stream. Now we are going to process video with a little Go web app and see the results of face recognition live in the browser.


Implementing Video Pipelines

One of the questions we get from customers is whether we are going to support video in the boxes, for example facial recognition on video with Facebox.

Supporting video is on the Machine Box roadmap, but video pipelines can be very complex, and different people have different requirements. So instead of supporting video right now, we’ve decided to open source examples that show how you can implement video pipelines and integrate them with the boxes Machine Box currently offers.

Extract Video Frames with Python and OpenCV

The first thing we have to do is open the video file and extract the frames to process, and for that we are going to use Python and OpenCV. It’s quite easy to do, and we can sample the frames, because we probably don’t want to read every single frame of the video. One frame per second should be enough to do face recognition.

Here’s the Python code:

    # video.py
    import base64
    import json
    import sys

    import cv2
    import imutils


    def videoStreamer(path, width=600, height=None, skip=None):
        # capture the video
        stream = cv2.VideoCapture(path)
        frames = int(stream.get(cv2.CAP_PROP_FRAME_COUNT))
        FPS = stream.get(cv2.CAP_PROP_FPS)
        if skip is None:
            skip = int(FPS)

        while True:
            # skip some of the frames, then read one
            for i in range(skip):
                stream.grab()
            (grabbed, frame) = stream.read()
            if not grabbed:
                return

            # resize the frame and write it to stdout as JSON
            frame = imutils.resize(frame, width=width, height=height)
            f = stream.get(cv2.CAP_PROP_POS_FRAMES)
            t = stream.get(cv2.CAP_PROP_POS_MSEC)
            res = bytearray(cv2.imencode(".jpeg", frame)[1])
            obj = {"frame": int(f),
                   "millis": int(t),
                   "total": frames,
                   # decode to str so json.dumps also accepts it on Python 3
                   "image": base64.b64encode(res).decode("ascii")}
            sys.stdout.write(json.dumps(obj))
            sys.stdout.write("\n")

The main idea of this script is to open the video and, at a configurable sampling rate, take each sampled frame's position info together with its image (JPEG, base64 encoded), wrap them in a JSON object, and print one object per line to standard output.

The complete code can be found in video.py in the project on GitHub.
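To make the sampling concrete, here is a small Go sketch (Go being the language of the rest of the pipeline) that lists which frames the "grab `skip` frames, then read one" loop ends up decoding. The function name `sampledIndices` is hypothetical, and the sketch assumes every `grab()` advances the stream by exactly one frame and that the reported frame count is accurate, which is not guaranteed for every container format.

```go
package main

// sampledIndices returns the 0-based indices of the frames that are decoded
// when a loop repeatedly grabs (discards) skip frames and then reads one,
// for a video with total frames. It assumes skip >= 0.
func sampledIndices(total, skip int) []int {
	var out []int
	// The first decoded frame is index skip; after that, each round
	// consumes skip discarded frames plus the one that is read.
	for i := skip; i < total; i += skip + 1 {
		out = append(out, i)
	}
	return out
}
```

For a 100-frame clip at 25 FPS with the default `skip = int(FPS)`, this gives frames 25, 51 and 77. The sampling period is `skip + 1` frames, so the default is slightly longer than one second (26/25 = 1.04 s at 25 FPS), which is close enough for face recognition.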

Video pipeline with Go and HTTP handlers

Once we can extract frames and pipe them to standard output, we can use Go to manage the execution of the Python command, send each frame to Facebox for recognition, and report back to the browser using the EventSource browser API, streaming the video processing progress in real time.

Executing the video Python script with Go

We are going to use Go to manage the lifecycle of the Python script that extracts the video frames. For that we can use the excellent exec.CommandContext, which lets us pass parameters and, by using the context.Context from the http.Request, cancel the execution at any time.

While the command runs, we can read its stdout and decode the JSON it prints. Here is the Go code snippet:

    // server.go
    flags := []string{"--path", path.Join(s.videos, filename), "--json", "True"}
    cmd := exec.CommandContext(r.Context(), "./video.py", flags...)
    stdout, err := cmd.StdoutPipe()
    if err != nil {
        // omitted error handling
    }
    // start the command; the pipe only produces data once it is running
    cmd.Start()

    dec := json.NewDecoder(stdout)
    for {
        var f Frame
        err := dec.Decode(&f)
        // process the frame, for example call facebox and nudebox
        // to do face recognition and adult content detection
        ...
    }
    // wait for the command to finish
    cmd.Wait()
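The snippet above decodes into a `Frame` value that is not shown. As a minimal sketch, the type could look like the following; the field names and types are assumptions inferred from the keys that video.py prints (`frame`, `millis`, `total`, `image`), not copied from the project. The helpers `decodeFrames` and `decodeString` are hypothetical and only illustrate how a `json.Decoder` consumes a stream of one JSON object per line until `io.EOF`.

```go
package main

import (
	"encoding/json"
	"io"
	"strings"
)

// Frame mirrors one JSON line printed by video.py.
// The layout is an assumption based on the keys the script emits.
type Frame struct {
	Frame  int    `json:"frame"`  // position of the frame in the video
	Millis int    `json:"millis"` // position in milliseconds
	Total  int    `json:"total"`  // total number of frames in the video
	Image  string `json:"image"`  // base64-encoded JPEG
}

// decodeFrames reads JSON objects from r until EOF and returns them.
// A real pipeline would process each frame as it arrives instead of
// collecting them, to avoid holding every image in memory.
func decodeFrames(r io.Reader) ([]Frame, error) {
	dec := json.NewDecoder(r)
	var frames []Frame
	for {
		var f Frame
		err := dec.Decode(&f)
		if err == io.EOF {
			return frames, nil // the script closed stdout: we are done
		}
		if err != nil {
			return frames, err // malformed or truncated output
		}
		frames = append(frames, f)
	}
}

// decodeString is a convenience wrapper for trying the decoder on a
// captured sample of the script's output without starting the script.
func decodeString(s string) ([]Frame, error) {
	return decodeFrames(strings.NewReader(s))
}
```

In the handler, the reader would be the pipe returned by `cmd.StdoutPipe()`. Distinguishing `io.EOF` from other errors matters here: the first means the script finished normally, while anything else usually means the script crashed or printed something that is not JSON.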

Calling Facebox to do face recognition

Once we have the frame in memory we can allow any kind of workflow. In this case, we send the frame to Facebox to perform the face recognition.

Beforehand, I taught Facebox the celebrities I was interested in, which was very easy to do using the developer console.

With the Go SDK the check is very easy to do; in other languages you can just make a simple HTTP call to the box.

    // server.go
    imgDec, err := base64.StdEncoding.DecodeString(f.Image)
    if err != nil {
        // omitted error handling
    }
    // send the frame to Facebox to do Face Recognition
    faces, err := s.facebox.Check(bytes.NewReader(imgDec))
    if err != nil {
        // omitted error handling
    }
    thumbnail = nil
    total = f.Total
    // check if facebox recognised any of the faces it found
    for _, face := range faces {
        if face.Matched {
            thumbnail = &f.Image
        }
    }

Since you have the frame in memory, you could easily send it to multiple Machine Box boxes, for example to do adult content detection with Nudebox, and avoid having to process the video twice.

Another option is to save the JSON response from Facebox, together with the frame and video data, into a database or a search engine like Elasticsearch, so you can run queries and search across your videos.

Server Sent Events to report back to the browser

We want the user experience in the browser to be real time, and Server Sent Events are a great way to achieve this. In Go it’s very straightforward; we only need to set the right headers, like this:

    // server.go
    func (s *Server) check(w http.ResponseWriter, r *http.Request) {
        // set the headers for Server Sent Events
        w.Header().Set("Content-Type", "text/event-stream")
        w.Header().Set("Cache-Control", "no-cache")
        w.Header().Set("Connection", "keep-alive")

        // one encoder to write the Events
        enc := json.NewEncoder(w)
        // ...
    }

Every time we need to send something to the browser, we write a message prefixed with data: and end it with two newline characters (\n\n), which tell the browser that the event is complete. For example:

    data: { "hello": "world" }\n\n

So with a little Go function we can send as many events as we want:

    // server.go
    func SendEvent(w http.ResponseWriter, enc *json.Encoder, v interface{}) {
        w.Write([]byte("data: "))
        if err := enc.Encode(v); err != nil {
            // omitted error handling
        }
        w.Write([]byte("\n\n"))
        if f, ok := w.(http.Flusher); ok {
            f.Flush()
        }
    }

In the browser we can process every event with a few lines of JavaScript.

    // app.js
    var es = new EventSource($this.attr('href'))
    es.onmessage = function(e){
        var obj = JSON.parse(e.data)
        if (obj.thumbnail) {
            frameData[obj.frame] = obj
            frames.append(...)
        }
        ...
    }
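To make the wire format of an event concrete, here is a small variation of the sending function, offered as a sketch rather than as the project's code. The names `formatEvent` and `sendEvent` are hypothetical. It uses `json.Marshal` instead of a shared `json.Encoder`: `Encoder.Encode` appends a newline of its own, so writing `\n\n` after it produces an extra blank line (which EventSource clients should simply ignore), whereas marshalling first gives exactly one `data:` line followed by one blank line.

```go
package main

import (
	"encoding/json"
	"io"
	"net/http"
)

// formatEvent renders v as a single Server Sent Event:
// a "data: " prefix, the compact JSON payload, and a blank line.
// Compact JSON never contains raw newlines, so the payload always
// fits on one data line.
func formatEvent(v interface{}) (string, error) {
	b, err := json.Marshal(v)
	if err != nil {
		return "", err
	}
	return "data: " + string(b) + "\n\n", nil
}

// sendEvent writes one event to the response and flushes it, so the
// browser receives it immediately instead of when the buffer fills up.
func sendEvent(w http.ResponseWriter, v interface{}) error {
	msg, err := formatEvent(v)
	if err != nil {
		return err
	}
	if _, err := io.WriteString(w, msg); err != nil {
		return err
	}
	if f, ok := w.(http.Flusher); ok {
		f.Flush()
	}
	return nil
}
```

Calling `formatEvent(map[string]string{"hello": "world"})` yields the string `data: {"hello":"world"}` followed by two newline characters, which is what the `onmessage` handler receives (without the prefix) in `e.data`.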

Here is the complete code of the http.Handler:

    // server.go
    func (s *Server) check(w http.ResponseWriter, r *http.Request) {
        var thumbnail *string

        // set the headers for Server Sent Events
        w.Header().Set("Content-Type", "text/event-stream")
        w.Header().Set("Cache-Control", "no-cache")
        w.Header().Set("Connection", "keep-alive")
        enc := json.NewEncoder(w)

        // start the video processing script
        filename := r.URL.Query().Get("name")
        flags := []string{"--path", path.Join(s.videos, filename), "--json", "True"}
        cmd := exec.CommandContext(r.Context(), "./video.py", flags...)
        stdout, err := cmd.StdoutPipe()
        if err != nil {
            // omitted error handling
        }
        cmd.Start()

        total := 0
        dec := json.NewDecoder(stdout)
        for {
            var f Frame
            err := dec.Decode(&f)
            if err == io.EOF {
                break
            }
            if err != nil {
                // omitted error handling
            }
            imgDec, err := base64.StdEncoding.DecodeString(f.Image)
            if err != nil {
                // omitted error handling
            }

            // send the frame to Facebox to do Face Recognition
            faces, err := s.facebox.Check(bytes.NewReader(imgDec))
            thumbnail = nil
            total = f.Total
            for _, face := range faces {
                if face.Matched {
                    thumbnail = &f.Image
                }
            }

            SendEvent(w, enc, VideoData{
                Frame:       f.Frame,
                TotalFrames: f.Total,
                Seconds:     (time.Duration(f.Millis/1000) * time.Second).String(),
                Complete:    false,
                Faces:       faces,
                Thumbnail:   thumbnail,
            })
        }
        cmd.Wait()

        // tell the browser that we have finished
        SendEvent(w, enc, VideoData{
            Frame:       total,
            TotalFrames: total,
            Complete:    true,
        })
    }
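The handler sends `VideoData` values whose definition is not shown. A possible shape, as a sketch only, is below. The JSON keys `frame` and `thumbnail` match what app.js reads; every other key name is an assumption, and the `Faces` field (which holds the SDK's face type) is left out so the example depends only on the standard library. The helpers `progressEvent` and `encodeEvent` are hypothetical.

```go
package main

import (
	"encoding/json"
	"time"
)

// VideoData is an assumed layout for the progress event sent to the browser.
// The Faces field from the handler is omitted to keep the sketch self-contained.
type VideoData struct {
	Frame       int     `json:"frame"`
	TotalFrames int     `json:"total_frames"` // key name is an assumption
	Seconds     string  `json:"seconds"`      // key name is an assumption
	Complete    bool    `json:"complete"`     // key name is an assumption
	Thumbnail   *string `json:"thumbnail,omitempty"`
}

// progressEvent builds the event for one processed frame. The position is
// truncated to whole seconds and formatted the same way as in the handler.
func progressEvent(frame, total, millis int, thumbnail *string) VideoData {
	return VideoData{
		Frame:       frame,
		TotalFrames: total,
		Seconds:     (time.Duration(millis/1000) * time.Second).String(),
		Thumbnail:   thumbnail,
	}
}

// encodeEvent returns the JSON payload that would follow the "data: " prefix.
func encodeEvent(v VideoData) (string, error) {
	b, err := json.Marshal(v)
	return string(b), err
}
```

For example, `progressEvent(1875, 5000, 75400, nil)` has `Seconds` equal to `1m15s`, because `time.Duration` formats 75 whole seconds that way. Using a `*string` for the thumbnail lets the handler signal "no recognised face in this frame" with `nil`, which the `if (obj.thumbnail)` check in the browser treats as false.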

Conclusion

You can use Go and Python to implement really powerful video pipelines that include face recognition, and the same approach extends to other kinds of per-frame analysis.

- Create an account on machinebox.io to be able to use Facebox in your pipeline.

- Check out the full code example on GitHub to implement your own pipeline: https://github.com/machinebox/videoanalysis

Processing Video, to do Face Recognition with Go and Python was originally published in Machine Box on Medium, where people are continuing the conversation by highlighting and responding to this story.