Train a spam detector with 97% accuracy using Machine Box

Let’s face it: a lot of people need to integrate machine learning into their products and services but don’t have the time or resources to go back to school to learn TensorFlow, or the money to hire expensive PhDs. Even if they do, it’s still a huge challenge to get it working correctly, deploy it into production, and support it forever after.

This is where Machine Box comes in. We make tools that greatly simplify the whole machine learning process from idea to production.

Because the tools are so simple, the main challenge in machine learning is now having the right dataset. Traditionally, you’d need thousands of examples of something to train an effective machine learning model, but we’ve built in some tech to reduce that significantly (in some cases you need only one example).

I’m going to share with you an example of a machine learning model I built in about 10 minutes:

Spam Detector

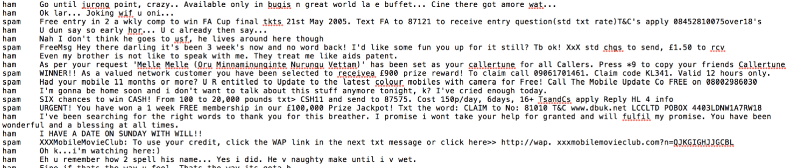

Nobody likes spam. Fortunately, there are some good datasets of spam online that you can download, like this one. This dataset is a list of spam SMS messages, as well as a list of non-spam (or ham) SMS messages to compare it to. This dataset is ideal for our purposes because it is labeled, but we need to do a little cleaning first.

The first thing you need to do is install Classificationbox. It is a machine learning model builder inside a Docker container, so it runs locally on your machine and never sends anything to the internet.

Once you have that installed, grab Textclass, a handy open source tool that automates some of the training process in Classificationbox and saves you a lot of time.

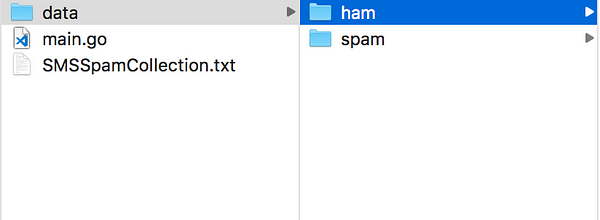

Now, back to our dataset. To use Textclass, each example needs to be a text file in a folder named after its class. So start by creating a folder called data with two subfolders, one called ham and one called spam. Then run a script like this one (in Go) that pulls out each example and writes it to a text file in the appropriate folder.

```go
package main

import (
	"bufio"
	"fmt"
	"log"
	"os"
	"path/filepath"
	"strconv"
	"strings"
)

func main() {
	if err := run(); err != nil {
		log.Fatalln(err)
	}
}

func run() error {
	log.Println("running")
	// Each line of the dataset has the form "<label>\t<message>".
	r, err := os.Open("SMSSpamCollection.txt")
	if err != nil {
		return err
	}
	defer r.Close()

	// Make sure the output folders exist before writing into them.
	for _, category := range []string{"ham", "spam"} {
		if err := os.MkdirAll(filepath.Join("data", category), 0o755); err != nil {
			return err
		}
	}

	s := bufio.NewScanner(r)
	counter := 0
	for s.Scan() {
		// SplitN keeps any tabs inside the message itself intact.
		subs := strings.SplitN(s.Text(), "\t", 2)
		if len(subs) < 2 {
			continue
		}
		category, text := subs[0], subs[1]
		if category != "spam" && category != "ham" {
			continue // skip malformed lines
		}
		if err := createFile(category, text, strconv.Itoa(counter)); err != nil {
			return err
		}
		counter++
		log.Printf("Content: %v - Category %v", counter, category)
	}
	// Read errors are reported only after Scan returns false.
	return s.Err()
}

// createFile writes one message to data/<category>/<filename>.txt.
func createFile(category, text, filename string) error {
	file, err := os.Create(filepath.Join("data", category, filename+".txt"))
	if err != nil {
		return fmt.Errorf("cannot create file: %w", err)
	}
	defer file.Close()
	// Fprint, not Fprintf: messages may contain '%' characters.
	_, err = fmt.Fprint(file, text)
	return err
}
```

Looking at it, we quickly see that there are a lot more examples of ham than there are of spam. For good classification, we need to have the exact same number of examples in each class. We call this class balancing.

I manually deleted the excess ham examples so that their number matched the number of spam examples. I’m sure there are better ways to do this with Terminal commands and the like.

Now it’s time to build our model.

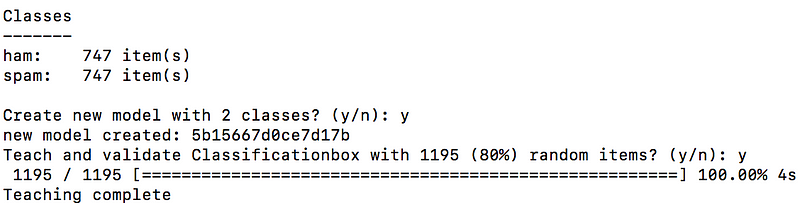

Simply open Terminal, run the Textclass script and point it to the directory with the two folders in it.

$ go run main.go -src /Path/to/data/smsspam/data

In a few seconds, it will teach the model the examples and then run a validation step, after which you’ll get an accuracy percentage. In this case, it was 97.32441471571906%.

That’s really good!

Best practices

If you’re not going to use Textclass and want to interact with Classificationbox through its API, there are some things you should keep in mind. First, always pick 80% of your data at random and use that to actually train the model. Keep the remaining 20% as a validation set to use later. When you train with the 80%, you must alternate between classes so that the model doesn’t develop a bias. For example, send training requests in the order spam/example1, ham/example1, spam/example2, ham/example2, and so on.