Boost your face recognition accuracy with this quick step

I admit, we’ve made it almost too easy to deploy a state-of-the-art face recognition machine learning model. So easy, in fact, that the only thing you need to know to try it for yourself is how to copy and paste commands into Terminal (and how to open a web browser).

It’s great to make things so simple, but there are still some basic machine learning principles you’ll need to keep in mind in order to get the most out of such tools. One of the most common mistakes I see people make when they’re trying to train their face recognition models is to focus too much on the right algorithm, and too little on the right training data.

Let’s take a simple, yet very common, use case for face recognition: finding celebrities in media content. Facebox, for example, makes it easy to find a photo of your celebrity, teach the model the face with a single image, and then start running it against all of your media.

Running Facebox against lots of video files does require some extra tools and workflow. For example, Machine Box’s parent company Veritone has a platform that sorts out those kinds of things for production and scale.

Teaching the model with a single image from the internet will work well in certain circumstances, but you’re also going to see a lot of false negatives (instances where the celebrity appears in the video but wasn’t detected).

How does one avoid this? Fortunately, the answer is also pretty simple. Use a photo FROM the video you want to recognize the person in.

For example, we could use this well-lit, professional photo of Keanu Reeves from the internet to try to find him in all of our thousands of hours of Late Night with Jimmy Fallon clips, and we’ll certainly get some positive hits.

But if we really want to dial up the accuracy, we’ll also throw in this gem of a freeze frame of Keanu mid-sentence.
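If you don’t already have a freeze frame lying around, one way to grab one is with ffmpeg. The sketch below is only an illustration: it assumes ffmpeg is installed and on your PATH, and the function names, file names and timestamp are placeholders I made up, not anything taken from the clip above.

```python
import subprocess


def freeze_frame_cmd(video_path, seconds, out_path):
    """Build an ffmpeg command that saves a single frame at `seconds` into the video."""
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    return [
        "ffmpeg",
        "-y",                 # overwrite the output file if it already exists
        "-ss", str(seconds),  # seek to the timestamp before opening the input
        "-i", video_path,
        "-frames:v", "1",     # write exactly one video frame
        out_path,
    ]


def grab_freeze_frame(video_path, seconds, out_path):
    """Run ffmpeg; raises CalledProcessError if ffmpeg exits with an error."""
    subprocess.run(freeze_frame_cmd(video_path, seconds, out_path), check=True)


# Hypothetical usage (placeholder names; pick a moment where the face is clearly visible):
# grab_freeze_frame("fallon_clip.mp4", 83.5, "keanu_frame.jpg")
```

The saved JPEG is then just another image you can teach to the model alongside the professional photo.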

When I ran a Late Night with Jimmy Fallon video through Videobox (another nifty tool), which was trained on the single professional photo of Keanu Reeves above, it found him throughout the video, but many of the results had confidence scores around 50–65%. This was the only instance above 65%:

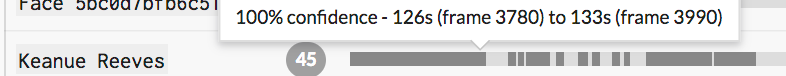

When I ran the same video again after teaching Facebox a freeze frame from the video itself, the confidence not only increased (up to 100% in some places), but the model also found him in many more places in the video.
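If you want to put numbers on that kind of before-and-after comparison, a small helper like the one below can do it. This is a rough sketch under my own assumptions: it expects each run as a list of (timestamp in seconds, confidence between 0 and 1) pairs, which is a shape I picked for illustration rather than the actual output format of Facebox or Videobox, and the sample values are made up.

```python
def summarize(detections, threshold=0.65):
    """Summarize one run over a video.

    `detections` is a list of (timestamp_seconds, confidence) pairs.
    This input shape is an assumption for illustration only.
    """
    scores = [confidence for _, confidence in detections]
    return {
        "total": len(scores),                                # every detection in the run
        "confident": sum(1 for s in scores if s > threshold),  # detections above the cutoff
        "best": max(scores, default=0.0),                    # highest confidence seen
    }


# Made-up placeholder values, NOT the results from the runs described above.
before = [(10, 0.52), (20, 0.61), (30, 0.70)]
after = [(10, 0.90), (15, 1.00), (20, 0.80), (30, 0.95), (40, 0.60)]

print("before:", summarize(before))
print("after: ", summarize(after))
```

Comparing the `total` and `confident` counts between the two runs gives you a quick read on whether a new training image actually helped.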

This gets even more important when you’re training with hundreds of different celebrities, and running the model against thousands of hours of video.

Is it going to be 100% accurate every time? Of course not. But the amount of time you’ll spend hunting for clips of Keanu Reeves in your collection will be significantly reduced.

By the way, do you know how to spot a deep fake? Look for the absence of blinking. The lack of blinking is an artifact of training only on images of people with their eyes open. I’m not super excited to teach you how to make better deep fake videos, but it is an interesting case about training data and accuracy. There’s a great podcast on this subject on Data Skeptic.

Boost your face recognition accuracy with this quick step was originally published in Hacker Noon on Medium.