Use object recognition to make sure your car hasn’t been stolen

What? What a strange title for a blog post. That is like saying “Don’t not use your telephone to avoid not making phone calls”. But I think it accurately describes the way in which I’m going to show you how to use machine learning to make sure your car is still in your driveway.

Several years ago, someone decided to waltz into my driveway and steal my car in the middle of the night. It wasn’t a pleasant experience, and it of course had me thinking about clever ways to prevent this from ever happening again.

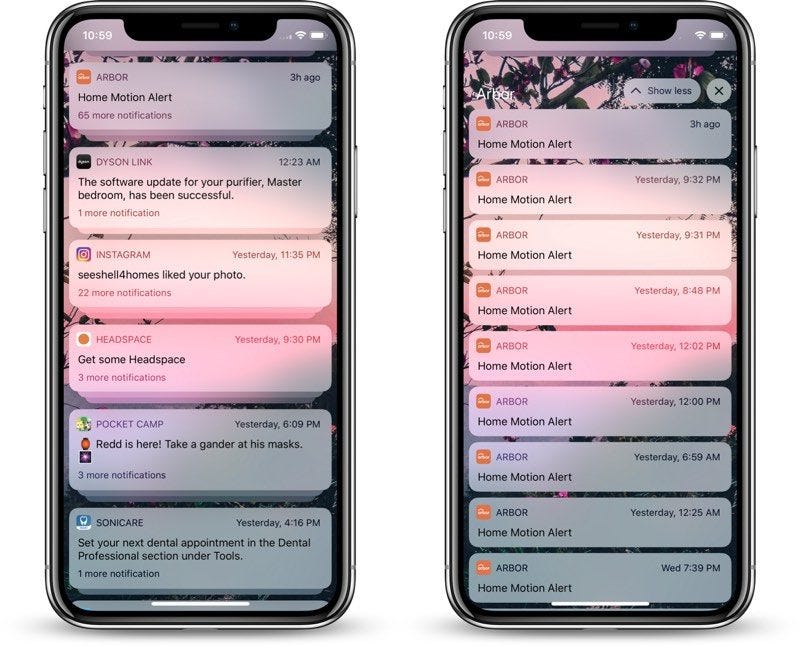

One way was to install security cameras everywhere, and then set them to alert me if there is any movement. But, as I’m sure you can guess, so many little things set off motion detection that the notifications quickly became noise, which I then ignored, defeating the purpose of getting notifications in the first place.

But what if I could tell you that you can set up a system that ONLY alerts you if your car is missing, and won’t alert you about anything else, ever?

Well… I can tell you that… so… I’m telling that to you now.

How?

Magic.

And by magic, I mean technology that is relatively straightforward and is based upon very solid math such as 2+2=4, 6/2=3, and triangles=interesting.

Obviously, the first thing you’re going to need is a camera. And the next thing you’re going to need is to set up some DIY home automation. I recommend having a look at these guys. They’ve got a lot of open source home automation stuff you can set up really easily to enable a workflow like the following.

I’d say that this is for the technically inclined. You probably should be a developer, or at least play one on TV.

Now, get yourself some training data. Namely, some video files of your car parked where it is usually parked.

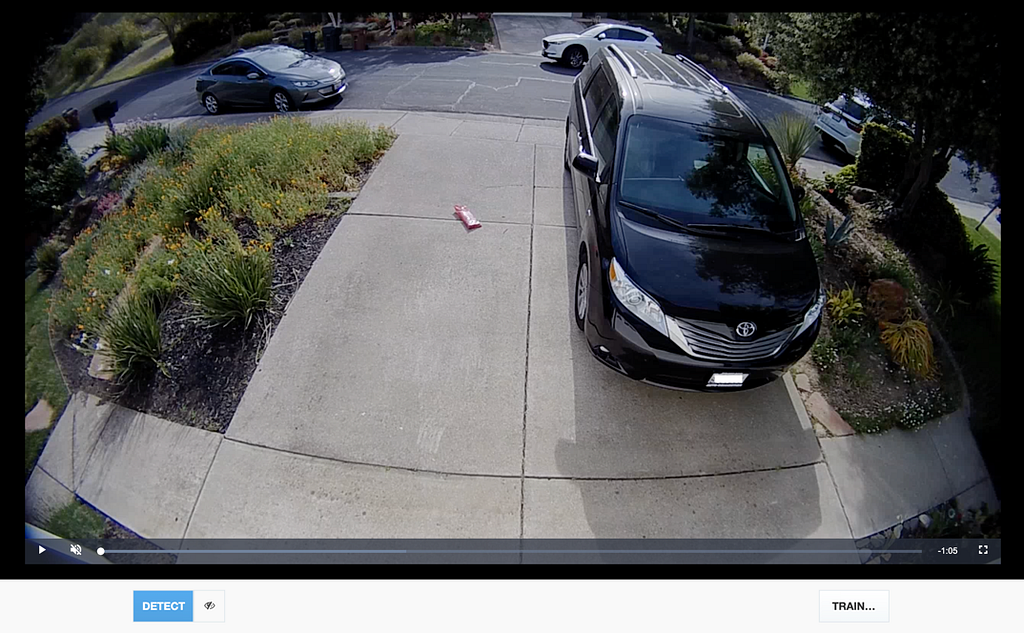

In my case, it looks like this.

Notice the recycling bins in the background? My recommendation is to get video examples from throughout the week, with different lighting, different ‘scenarios’ on the street such as cars, bins, animals, ghosts, whatever else is common. In my case, I need video with and without those bins.

Now comes the fun part.

Go ahead and download this nifty tool from Machine Box called Objectbox.

Annotate

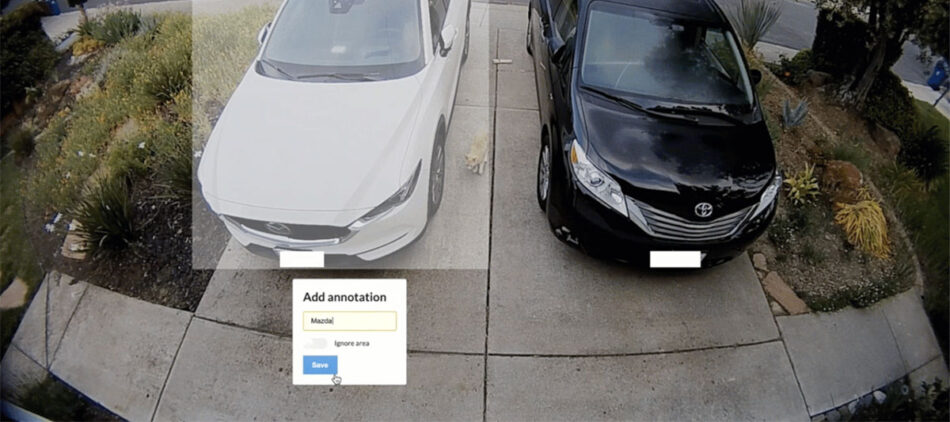

Follow the instructions to get yourself set up with the annotation tool in Objectbox, and place your videos into the boxdata/files directory.

Then open up the annotation app by visiting https://localhost:8080, clicking on Annotation Tool, and selecting one of your example videos. Play the video, then pause it, and draw a box around your car. Give it a label that makes sense, play the video again, hit pause again a few seconds later, and draw another box around your car. Rinse and repeat 2 or 3 times per video.

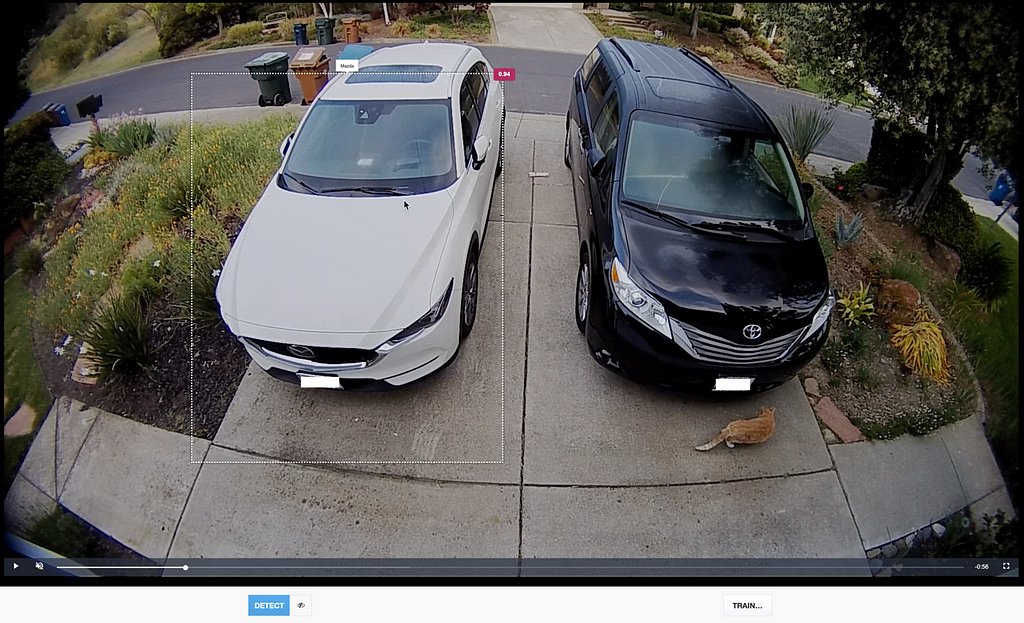

My recommendation is to grab about 20 examples across as many different scenarios as possible. For example, you see that dumb cat next to my amazing car? I made sure it was included in the box this time, but not for subsequent annotations. I want the model to learn what is the same every time versus what is different. Objectbox takes the entire frame into context, so you really do need real life examples at different times of day and different days of the week to get the best results.

How did we do?

My favorite thing about Objectbox (because I was the product manager of it and asked for it) is the ability to immediately get feedback on your training. Have you provided enough examples? Did you do it right? Great questions. Objectbox gives you the answer by letting you click ‘train’, then play and pause the video on a frame. It will run the freshly trained model against that frame and give you an answer right then and there.

For example, here is a new frame that Objectbox has never seen before.

Nailed it! Now, let’s see what happens when there is some video where there is no delicious Mazda present:

Perfect — no detections.

So here is what I would do to set up my pleasedontstealmycar™ workflow:

- Download the state file (training) from Objectbox (after checking it on a few more videos).

- Build a periodic job that downloads a frame from my camera and POSTs it to Objectbox running with my state file, which will check to see if Mazda=true.

- If Mazda=stolen, send alert to my phone and also the national guard.

- Probably schedule this to not alert you when you’re expecting the Mazda to be somewhere else.
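
If you want a starting point for the steps above, here is a rough Python sketch. Fair warning: the endpoint path (`/objectbox/check`), the `base64` request field, and the `detectors` / `objects` / `score` response shape are my assumptions for illustration, so check them against the API docs your running box serves. The detector name `mazda`, the score threshold, the weekday 08:00 to 18:00 "car is at work" window, and the three-misses rule are all made up too. Adjust the scheme, host, and port to match your setup.

```python
import base64
import json
import urllib.request
from datetime import datetime, time

# ASSUMPTION: address, endpoint path, and JSON shapes are illustrative guesses;
# verify them against the docs of the Objectbox instance you are running.
OBJECTBOX_CHECK_URL = "http://localhost:8080/objectbox/check"


def check_frame(image_bytes, url=OBJECTBOX_CHECK_URL):
    """POST one camera frame (base64 inside JSON) and return the decoded reply."""
    body = json.dumps({"base64": base64.b64encode(image_bytes).decode("ascii")})
    request = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def car_present(result, detector_name="mazda", min_score=0.5):
    """True if the named detector reported at least one confident object."""
    for detector in result.get("detectors", []):
        if detector.get("name") != detector_name:
            continue
        for obj in detector.get("objects", []):
            if obj.get("score", 0.0) >= min_score:
                return True
    return False


def expected_away(now, away_start=time(8, 0), away_end=time(18, 0)):
    """True on weekdays during the hours the car is normally somewhere else."""
    return now.weekday() < 5 and away_start <= now.time() < away_end


class MissingCarMonitor:
    """Signals an alert only after several consecutive frames without the car."""

    def __init__(self, misses_before_alert=3):
        self.misses_before_alert = misses_before_alert
        self.consecutive_misses = 0

    def update(self, present, now):
        """Feed one observation; return True when an alert should be sent."""
        if present or expected_away(now):
            self.consecutive_misses = 0
            return False
        self.consecutive_misses += 1
        # Fire exactly once, on the frame that crosses the threshold.
        return self.consecutive_misses == self.misses_before_alert
```

To wire it up, grab a frame from your camera every minute or so, call `monitor.update(car_present(check_frame(frame_bytes)), datetime.now())`, and send your phone notification whenever it returns `True`. Waiting for a few misses in a row is just my way of not panicking over one bad frame, say a delivery truck parked in front of the camera.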

This should seriously reduce the number of notifications you get from Arlo, Ring, Nest, etc., and only alert you when something that should be there isn’t.

What else can you do with Objectbox? Open your garage when you pull into the driveway, alert you when your mail has arrived, notify you if your recycling bins aren’t on the street because you forgot because you’ve been busy doing cool stuff… I’m sure you can think of many great use cases.

Taking it to the next level

If you’re a big facility or a small office, and you want to start thinking about using machine learning to make more relevant alerts, take a look at this. Using aiWARE, a platform by Veritone (who acquired Machine Box), you can start to think about doing this kind of thing at scale.

Simply upload your state files to Veritone’s Library application, then use Objectbox to run against all of your camera feeds. You don’t have to worry about orchestrating Docker containers or managing the processing of videos. You don’t even need to do much to get notifications working: aiWARE has a custom Node-RED implementation that lets you easily process workflows like this without doing much coding.

Use object recognition to make sure your car hasn’t been stolen was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.