Scanning Retinas for Heart Disease with AI Prediction

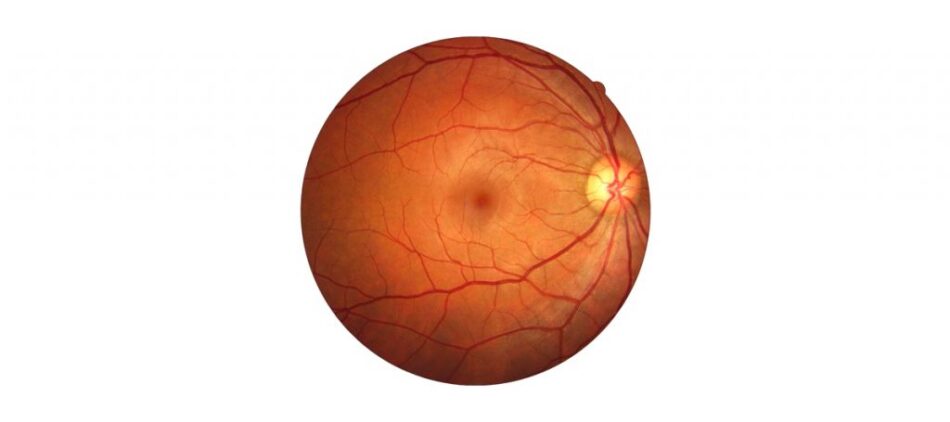

Researchers have devised a deep-learning algorithm that predicts cardiovascular risk from scans of patients’ retinas.

A team of researchers from Google, Verily Life Sciences and the Stanford School of Medicine trained its deep-learning algorithm using data from 284,335 patients. The algorithm was able to predict cardiovascular (CV) issues with “surprisingly high accuracy” by looking for risk factors including diabetes, smoking, and high blood pressure.

Based on the scans, the algorithm could distinguish the retinal images of smokers from those of non-smokers with 71 percent accuracy, Google noted in a blog post. And although doctors can tell whether patients have acute high blood pressure by looking at their retinal images, the algorithm goes further, predicting a systolic blood pressure level for every patient.

“In addition to predicting the various risk factors (age, gender, smoking, blood pressure, etc) from retinal images, our algorithm was fairly accurate at predicting the risk of a CV event directly,” Google stated. “Our algorithm used the entire image to quantify the association between the image and the risk of heart attack or stroke. Given the retinal image of one patient who (up to 5 years) later experienced a major CV event (such as a heart attack) and the image of another patient who did not, our algorithm could pick out the patient who had the CV event 70 percent of the time. This performance approaches the accuracy of other CV risk calculators that require a blood draw to measure cholesterol.”
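The "70 percent of the time" figure in the quote is a pairwise comparison: take one patient who had an event and one who did not, and check whether the model gives the first a higher risk score. Averaged over all such pairs, this is the concordance statistic, which equals the area under the ROC curve. The sketch below shows the arithmetic only; the function name and the risk scores are made up for illustration and are not taken from the study.

```python
from itertools import product


def pairwise_concordance(event_scores, no_event_scores):
    """Fraction of (event, no-event) patient pairs in which the patient
    who had the event received the higher risk score. Ties count as half."""
    if not event_scores or not no_event_scores:
        raise ValueError("need at least one patient in each group")
    wins = 0.0
    for event, no_event in product(event_scores, no_event_scores):
        if event > no_event:
            wins += 1.0
        elif event == no_event:
            wins += 0.5
    return wins / (len(event_scores) * len(no_event_scores))


# Hypothetical risk scores, invented for this example.
event_scores = [0.9, 0.6, 0.4]          # patients who later had a CV event
no_event_scores = [0.7, 0.5, 0.3, 0.2]  # patients who did not

concordance = pairwise_concordance(event_scores, no_event_scores)
print(f"{concordance:.2f}")  # 9 of the 12 pairs are ranked correctly
```

A value of 0.5 would mean the scores rank pairs no better than a coin flip, and 1.0 would mean every pair is ranked correctly.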

Even more significantly, the researchers were able to examine how the algorithm arrived at its predictions, producing heatmaps that show which pixels in a retinal scan were most important for predicting a specific CV risk factor.

“For example, the algorithm paid more attention to blood vessels for making predictions about blood pressure,” Google wrote. “Explaining how the algorithm is making its prediction gives doctors more confidence in the algorithm itself. In addition, this technique could help generate hypotheses for future scientific investigations into CV risk and the retina.”
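To give a feel for how a pixel-importance heatmap can be built, here is a minimal sketch of one generic approach, occlusion sensitivity: blank out one patch of the image at a time and record how much the model's score drops. The article does not say how the researchers generated their heatmaps, so this is not presented as their method. The `toy_score` function is a hypothetical stand-in for a trained model, and the tiny 4x4 "image" is invented.

```python
def occlusion_heatmap(image, score_fn, patch=2, fill=0.0):
    """Return a grid the same size as `image` where each cell holds the
    drop in score caused by blanking out the patch that contains it."""
    rows, cols = len(image), len(image[0])
    baseline = score_fn(image)
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            r1, c1 = min(r0 + patch, rows), min(c0 + patch, cols)
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in range(r0, r1):
                for c in range(c0, c1):
                    occluded[r][c] = fill
            drop = baseline - score_fn(occluded)
            for r in range(r0, r1):
                for c in range(c0, c1):
                    heat[r][c] = drop
    return heat


def toy_score(image):
    """Hypothetical 'model': mean brightness of the middle row only."""
    middle = image[len(image) // 2]
    return sum(middle) / len(middle)


# Invented 4x4 image with a bright horizontal "vessel" along row 2.
image = [[0.1] * 4 for _ in range(4)]
image[2] = [1.0] * 4

heat = occlusion_heatmap(image, toy_score)
for row in heat:
    print(row)
```

Patches that overlap the bright row produce a score drop and the others produce none, so the heatmap lights up where the toy model is "looking". The resolution is limited to the patch size, which is why the row next to the vessel is highlighted too.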

Google said the techniques it has developed represent a new method of scientific discovery.

“Traditionally, medical discoveries are often made through a sophisticated form of guess and test — making hypotheses from observations and then designing and running experiments to test the hypotheses,” the company wrote. “However, with medical images, observing and quantifying associations can be difficult because of the wide variety of features, patterns, colors, values and shapes that are present in real images. Our approach uses deep learning to draw connections between changes in the human anatomy and disease, akin to how doctors learn to associate signs and symptoms with the diagnosis of a new disease. This could help scientists generate more targeted hypotheses and drive a wide range of future research.”

Stephan Cunningham is vice president of product management at Veritone. Working with core internal teams, including industry-specific general managers and engineering, as well as directly with clients and prospects, he leads the disciplines and business processes that govern the Veritone aiWARE platform.